In the fast-paced world of technology, crafting a robust and efficient system isn't just about writing code; it's about meticulously planning the key components of system design and integrating them into a cohesive, functional whole. Think of it as an architect designing a skyscraper: every beam, every pipe, every electrical circuit must be planned with precision to ensure the building stands strong, serves its purpose, and adapts to future needs. Without a solid blueprint and carefully selected materials, even the most innovative concept can crumble under pressure.

This comprehensive guide will demystify the core building blocks of system design, helping you understand how to create systems that aren't just functional, but also scalable, reliable, maintainable, secure, and delightful to use.

At a Glance: Building Blocks for Robust Systems

- Architecture is Your Blueprint: Choose the right structural pattern (monolithic, microservices, serverless) to define how your system functions.

- Database is Your Memory: Design efficient data storage, whether SQL or NoSQL, to manage information critical to your application.

- APIs are Your Language: Define how different parts of your system and external services communicate seamlessly.

- Caching is Your Speed Boost: Store frequently accessed data closer to users to reduce latency and improve performance.

- Load Balancing is Your Traffic Cop: Distribute incoming requests across servers to prevent bottlenecks and ensure high availability.

- Security is Your Shield: Implement multi-layered defenses to protect data and user privacy from threats.

- Scalability is Your Growth Plan: Design your system to handle increasing user demand and data volume effortlessly.

- Redundancy is Your Backup: Duplicate critical components to ensure continuous operation even when failures occur.

- Monitoring is Your Health Check: Keep a watchful eye on system performance and identify issues before they impact users.

- UX is Your User's Smile: Remember that even backend design choices impact the end-user experience.

- Design for the Future: Always consider requirements, potential failures, and maintainability from day one.

Why System Design is Your Secret Weapon

Every application, from a simple mobile app to a complex enterprise platform, is a system. And every system, no matter its size, benefits immensely from thoughtful design. System design is the crucial, often overlooked, phase where you translate abstract user requirements into a concrete technical blueprint. It’s the difference between a house built on sand and one built on a bedrock foundation. A well-designed system isn't just about meeting today's needs; it's about anticipating tomorrow's challenges, ensuring your solution remains relevant, performant, and secure long into the future. It directly impacts your system's reliability, cost-effectiveness, and your team's ability to innovate without constant firefighting.

The Blueprint: Essential Key Components of System Design

Let's dive into the core elements that form the backbone of any well-engineered system. Understanding each of these components is paramount to creating a solution that truly stands the test of time.

1. System Architecture: The Foundational Structure

The architecture is the macro-level design that defines the system's structure, behavior, and how its components interact. It's the highest-level plan, dictating everything from how data flows to how easily you can scale. Choosing the right architectural pattern is one of the most critical decisions you'll make, as it profoundly impacts complexity, development speed, and long-term maintainability.

- Monolithic Architecture: A single, unified codebase where all components are tightly coupled. Think of it as a single large building housing all departments.

- Pros: Simpler to develop and deploy initially, easier debugging in a single process.

- Cons: Harder to scale individual components, can become unwieldy as it grows, technology stack is usually uniform.

- Microservices Architecture: Decomposing an application into a suite of small, independent services, each running in its own process and communicating via lightweight mechanisms (like APIs). This is like a city with many independent buildings, each serving a specific purpose.

- Pros: Highly scalable (individual services can scale independently), resilient (failure in one service doesn't bring down the whole system), technology diversity (different services can use different tech stacks).

- Cons: Increased operational complexity, distributed debugging challenges, requires robust communication and monitoring.

- Serverless Architecture: Developers write and deploy code in "functions," which are triggered by events, and the cloud provider dynamically manages the server infrastructure. You only pay for the compute time consumed.

- Pros: No server management, auto-scaling, pay-per-execution cost model, faster deployment.

- Cons: Vendor lock-in, potential for "cold starts" (latency when a function hasn't been recently invoked), complex local testing.

Making the Choice: Your architectural decision should align directly with your application's specific requirements regarding complexity, expected load, team size, and deployment needs. A simple internal tool might thrive as a monolith, while a large-scale consumer-facing application would likely benefit from microservices or serverless patterns.

2. Database Design: The Data Heartbeat

The database is where your application's crucial information resides, making its design a cornerstone of system performance, scalability, and integrity. Whether you opt for a relational (SQL) or non-relational (NoSQL) database, careful consideration of your data models, relationships, and access patterns is non-negotiable.

- SQL Databases (e.g., PostgreSQL, MySQL, SQL Server): Structured, table-based databases with predefined schemas, ideal for applications requiring strong data consistency, complex transactions, and clear relationships between data entities.

- Key Considerations:

- Normalization: Organizing data to reduce redundancy and improve data integrity. While often beneficial, over-normalization can lead to complex joins and slower queries.

- Indexing: Creating indexes on frequently queried columns dramatically speeds up read operations.

- Relationships: Carefully define one-to-one, one-to-many, and many-to-many relationships.

- NoSQL Databases (e.g., MongoDB, Cassandra, Redis): Offer flexible schemas, making them suitable for handling large volumes of unstructured or semi-structured data, and for applications requiring high scalability and availability over strict consistency.

- Key Considerations:

- Data Models: Choose between document, key-value, columnar, or graph models based on your data structure and access patterns.

- Denormalization: Often used to optimize read performance by duplicating data, which can simplify queries but requires careful handling of data consistency.

- Distributed Nature: Many NoSQL databases are designed for horizontal scaling across multiple servers.

Optimization is Key: Regardless of your choice, proper indexing is critical for efficient queries. Understanding common query patterns helps you structure your data for optimal performance. Regularly reviewing and optimizing your database schema as your application evolves is a continuous process.
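To make the indexing point concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` table, its columns, and the index name `idx_orders_customer` are made up for illustration. The script asks SQLite for its query plan before and after creating an index on the filtered column; the exact plan wording varies between SQLite versions, so treat the printed text as indicative only.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's query plan for a statement as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); detail is the readable part.
    return " | ".join(row[3] for row in rows)


# Hypothetical schema, populated with 1,000 rows spread over 100 customers.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

lookup = "SELECT id, total FROM orders WHERE customer_id = ?"

# Without an index on customer_id, SQLite has to scan the table.
plan_before = query_plan(conn, lookup, (42,))

# Index the column that the common query filters on.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, lookup, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

The same habit carries over to other databases: most SQL engines offer an `EXPLAIN`-style command, and checking the plan for your most frequent queries is a cheap way to confirm an index is actually being used.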

3. APIs & Communication: The Connective Tissue

APIs (Application Programming Interfaces) are the language your system components use to talk to each other and to external services. A well-defined API is like a clear instruction manual, ensuring seamless interaction and robust integration. The choice of communication protocol dictates speed, flexibility, and simplicity.

- REST (Representational State Transfer): The most common architectural style for networked applications, leveraging standard HTTP methods (GET, POST, PUT, DELETE) for stateless communication.

- Pros: Widely understood, uses standard HTTP, highly flexible.

- Cons: Can lead to "over-fetching" or "under-fetching" data, multiple round trips for complex data graphs.

- GraphQL: A query language for APIs and a runtime for fulfilling those queries with your existing data. It allows clients to request exactly the data they need, no more, no less.

- Pros: Reduces over-fetching, single endpoint for all data, strong typing improves development experience.

- Cons: Can be more complex to set up, caching can be more challenging than REST.

- gRPC: A high-performance, open-source universal RPC (Remote Procedure Call) framework. It uses Protocol Buffers for defining service interfaces and message structures, making it highly efficient.

- Pros: Very high performance, language-agnostic, excellent for inter-service communication in microservices architectures.

- Cons: Steeper learning curve, requires code generation, less human-readable than REST.

Fault-Tolerant Communication: In distributed systems, communication isn't always reliable. Designing for fault tolerance means implementing strategies like:

- Retries: Automatically re-attempting failed requests.

- Timeouts: Setting limits on how long to wait for a response.

- Circuit Breakers: Preventing an application from repeatedly trying to execute an operation that is likely to fail, thus saving resources.
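The three strategies above can be sketched in a few lines of Python. This is an illustrative, single-threaded sketch rather than a production library: `CircuitBreaker`, `call_with_retries`, and the `flaky` function are hypothetical names, and the thresholds are arbitrary. Timeouts are assumed to live inside the operation itself (for example, a timeout argument passed to whatever HTTP client you use), so a timed-out call simply surfaces here as an exception.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to call a failing dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: allow one trial call ("half-open").
            # A single failure from here re-opens the circuit.
            self.opened_at = None
            self.failures = self.failure_threshold - 1
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success closes the circuit fully
        return result


def call_with_retries(operation: Callable[[], T], attempts: int = 3,
                      base_delay: float = 0.1,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a failing operation, doubling the delay after each failure."""
    for attempt in range(attempts):
        try:
            return operation()
        except CircuitOpenError:
            raise  # retrying cannot help while the breaker is open
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


# Simulated dependency that fails twice, then recovers.
calls = {"count": 0}


def flaky() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated network failure")
    return "ok"


breaker = CircuitBreaker(failure_threshold=5)
result = call_with_retries(lambda: breaker.call(flaky), sleep=lambda _: None)
print(result, calls["count"])
```

Retries are only safe when the operation can be repeated without side effects, and real systems usually add random jitter to the backoff so that many clients don't retry in lockstep.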

4. Caching: The Performance Accelerator

Caching is a critical component for drastically improving system performance and reducing the load on your database and other backend services. By storing frequently accessed data in a faster, more accessible memory layer, you significantly cut down on the need to fetch data from slower primary storage.

- How it Works: When a request comes in, the system first checks the cache. If the data is found (a "cache hit"), it's returned immediately. If not (a "cache miss"), the system fetches the data from the original source, serves it, and then stores it in the cache for future requests.

- Types of Caching:

- Client-side Caching (Browser Cache): Stores static assets (images, CSS, JS) on the user's browser.

- CDN Caching (Content Delivery Network): Distributes content across geographically dispersed servers to serve users from the closest location.

- Application-level Caching: An in-process cache, or a dedicated in-memory store such as Redis or Memcached, that holds frequently accessed data or computed results.

- Database Caching: Some databases have built-in caching mechanisms.

The Challenge of Stale Data: The biggest challenge with caching is ensuring data freshness. Implementing robust cache invalidation strategies is crucial to prevent users from seeing outdated information. This might involve:

- Time-to-Live (TTL): Data expires after a set period.

- Event-driven Invalidation: Data is explicitly removed from the cache when its source data changes.

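- Write-through/Write-back: Write-through updates the cache and the primary store in the same operation, keeping them in sync at the cost of slower writes. Write-back updates the cache first and flushes to the primary store later, which is faster but risks losing data if the cache fails before the flush.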
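The hit/miss flow and two of the invalidation strategies described above (TTL and event-driven invalidation) fit in a small in-process sketch. `TTLCache` and the dictionary standing in for a database are hypothetical; a real deployment would more likely use a shared store, but the logic is the same. The clock is injectable so the example can "advance time" without sleeping.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Check the cache first, load from the source on a miss, expire by TTL."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:  # present and not expired
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = loader(key)  # fall back to the slower source
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Event-driven invalidation: drop the entry when the source changes."""
        self._entries.pop(key, None)


fake_now = [0.0]                      # controllable clock for the demo
database = {"user:1": "Ada"}          # stand-in for the primary store
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])


def load(key):
    return database[key]


first = cache.get_or_load("user:1", load)    # miss: loaded from the "database"
second = cache.get_or_load("user:1", load)   # hit: served from the cache

database["user:1"] = "Ada Lovelace"          # source data changes...
cache.invalidate("user:1")                   # ...so the cached copy is dropped
third = cache.get_or_load("user:1", load)    # miss: picks up the new value

fake_now[0] = 61.0                           # more than 60 seconds later
fourth = cache.get_or_load("user:1", load)   # miss: the entry's TTL has expired

print(first, second, third, fourth, cache.hits, cache.misses)
```

Without the `invalidate` call, the second read after the update would have kept returning the old name until the TTL ran out, which is exactly the stale-data window the TTL value controls.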

5. Load Balancing: The Traffic Cop

Imagine a popular restaurant with only one chef. At peak hours, customers would wait forever. A load balancer is like a skilled maître d' who directs incoming customers to multiple available chefs, ensuring no single chef is overwhelmed and everyone gets served promptly. In system design, load balancing distributes incoming network traffic across a group of backend servers, improving availability, reliability, and performance.

- Benefits:

- Increased Availability: If one server fails, traffic is redirected to healthy servers.

- Improved Performance: Prevents any single server from becoming a bottleneck, leading to faster response times.

- Scalability: Allows you to easily add or remove servers as demand fluctuates.

- Common Strategies:

- Round Robin: Distributes requests sequentially to each server in a list.

- Least Connections: Directs traffic to the server with the fewest active connections.

- Weighted Load Balancing: Prioritizes servers with more capacity or better performance.

- IP Hash: Directs requests from the same client IP address to the same server, useful for maintaining session stickiness.

Load balancers are essential for any system expecting significant user traffic, providing a critical layer of resilience and efficiency.
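To show how the selection strategies listed above differ, here is a rough Python sketch of round robin, least connections, and IP hash. The class names and the server names (`app-1` and so on) are invented for the example. Real load balancers also handle health checks, weights, and concurrency, all of which are left out here.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobin:
    """Hand out servers in a fixed rotation."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each new request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Ties go to the server listed first.
        server = min(self.active, key=lambda name: self.active[name])
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


def ip_hash(client_ip: str, servers: List[str]) -> str:
    """Map a client IP to a server; the same IP always gets the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = ["app-1", "app-2", "app-3"]

rr = RoundRobin(servers)
rr_picks = [rr.pick() for _ in range(4)]  # wraps around after the third pick

lc = LeastConnections(servers)
a = lc.acquire()      # all idle, so the first server is chosen
b = lc.acquire()      # first server is busy, so the second is chosen
lc.release(a)         # the first request finishes
c = lc.acquire()      # the first server is idle again

sticky_1 = ip_hash("203.0.113.7", servers)
sticky_2 = ip_hash("203.0.113.7", servers)

print(rr_picks, a, b, c, sticky_1 == sticky_2)
```

One caveat with the simple modulo approach in `ip_hash`: adding or removing a server changes the mapping for most clients, which is why systems that depend on stickiness often use consistent hashing instead.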

6. Security: The Unbreakable Shield

Security isn't a feature you bolt on at the end; it must be an integral part of every layer of your system design, from the very first line of code to the deployment environment. Neglecting security can lead to catastrophic data breaches, loss of trust, and severe legal repercussions.

- Key Security Aspects:

- Data Protection: Secure data in transit (e.g., using HTTPS/TLS) and at rest (encryption for databases and storage).

- Authentication: Verifying the identity of users and systems (e.g., passwords, multi-factor authentication, OAuth).

- Authorization: Determining what an authenticated user or system is allowed to do (e.g., role-based access control, permissions).

- Input Validation: Protecting against common vulnerabilities like SQL injection and cross-site scripting (XSS) by validating all user input, using parameterized queries, and encoding output before rendering it.

- Protection Against DoS/DDoS: Implementing measures to mitigate Denial of Service attacks.

- Secure Coding Practices: Following best practices to avoid common software vulnerabilities.

- Regular Audits and Updates: Continuously monitor for new threats, perform security audits, and apply patches promptly.

Prioritizing security from the ground up, rather than treating it as an afterthought, saves immense headaches and costs in the long run.
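As one concrete instance of the input-handling point in the list above, the sketch below contrasts building SQL by string concatenation with a parameterized query, using Python's built-in `sqlite3` module. The `users` table and the function names are hypothetical, and the unsafe version exists only to show what goes wrong.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, is_admin INTEGER)")
conn.executemany(
    "INSERT INTO users (name, is_admin) VALUES (?, ?)",
    [("alice", 0), ("root", 1)],
)


def find_user_unsafe(name: str) -> list:
    # Anti-pattern: user input is spliced directly into the SQL text,
    # so quotes in the input can change the meaning of the query.
    return conn.execute(
        f"SELECT name FROM users WHERE name = '{name}'"
    ).fetchall()


def find_user_safe(name: str) -> list:
    # Parameterized query: the driver passes the value separately from the
    # SQL text, so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"

leaked = find_user_unsafe(malicious)   # the OR clause matches every row
safe = find_user_safe(malicious)       # no user has this literal name

print(len(leaked), safe)
```

Parameterization addresses SQL injection specifically. XSS is a separate problem that is usually handled by encoding output at render time, typically through your template engine's auto-escaping.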

7. Scalability & Performance: Growing with Grace

A system's ability to handle increasing workloads (scalability) and respond quickly (performance) are critical for user satisfaction and business growth. Ignoring these aspects can lead to slow, unresponsive applications that alienate users and damage your reputation.

- Scalability: The ability of a system to handle a growing amount of work by adding resources.

- Vertical Scaling (Scaling Up): Upgrading existing servers with more CPU, RAM, or storage. Simpler but has limits.

- Horizontal Scaling (Scaling Out): Adding more servers to distribute the load. More complex to manage but offers near-limitless potential. This often involves stateless services that can run on any server.

- Performance Optimization: Focusing on reducing latency, improving response times, and ensuring efficient resource utilization.

- Code Optimization: Writing efficient algorithms, minimizing database queries, optimizing loops.

- Resource Management: Efficient use of CPU, memory, network, and disk I/O.

- Asynchronous Processing: Using message queues and background jobs for non-critical or time-consuming tasks to keep the main application responsive.

- Content Delivery Networks (CDNs): Bringing content closer to users globally.
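The asynchronous processing idea from the list above can be illustrated with Python's standard `queue` and `threading` modules. This is a single-process stand-in for a real message queue and worker pool; `handle_signup` and `send_welcome_email` are hypothetical names, and the "email" is just appended to a list.

```python
import queue
import threading

jobs = queue.Queue()   # holds email addresses, or None as a stop signal
sent = []              # records the work done by the background worker


def send_welcome_email(address: str) -> None:
    # Placeholder for slow work (an SMTP call, image resizing, a report).
    sent.append(address)


def worker() -> None:
    while True:
        address = jobs.get()
        if address is None:          # stop signal
            jobs.task_done()
            break
        try:
            send_welcome_email(address)
        finally:
            jobs.task_done()         # mark the job finished even on error


def handle_signup(address: str) -> str:
    # The request handler only enqueues the job and returns immediately.
    jobs.put(address)
    return "202 Accepted"


thread = threading.Thread(target=worker, daemon=True)
thread.start()

responses = [handle_signup(a) for a in ("a@example.com", "b@example.com")]

jobs.join()        # wait until both queued jobs have been processed
jobs.put(None)     # ask the worker to stop
thread.join()

print(responses, sent)
```

In production the queue would normally be a durable broker so that jobs survive a process restart, but the shape is the same: the user-facing path does the minimum and hands the slow part to a worker.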

Testing is Vital: Load testing and performance monitoring are indispensable tools for identifying bottlenecks, predicting system behavior under stress, and ensuring your system can cope with anticipated demand.

8. Redundancy & Fault Tolerance: The Safety Net

No system is infallible; hardware fails, networks go down, and software bugs appear. Designing for failures by incorporating redundancy and fault tolerance ensures your system remains available and reliable even when things go wrong.

- Redundancy: Duplicating critical components or functions. If one instance fails, another immediately takes its place.

- Examples: Running multiple web servers behind a load balancer, using RAID for disk storage, replicating databases across multiple nodes or data centers.

- Fault Tolerance: The system's ability to continue operating, perhaps in a degraded but still functional manner, despite failures.

- Examples:

- Automatic Failover: When a primary component fails, a backup automatically takes over.

- Graceful Degradation: The system continues to function with reduced features or performance during partial failures.

- Circuit Breakers (as mentioned in APIs): Isolate failing components to prevent cascading failures.

- Idempotent Operations: Operations that can be performed multiple times without changing the result beyond the initial application, critical for reliable retries.
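Idempotency is easiest to see with a retried write. In this sketch, a hypothetical `PaymentService` remembers the result for each client-supplied idempotency key, so a repeated request returns the original receipt instead of charging twice. The in-memory dictionary is an assumption for brevity; a real service would persist the keys.

```python
from typing import Dict


class PaymentService:
    """Toy service that makes `charge` safe to retry via an idempotency key."""

    def __init__(self) -> None:
        self.balance_cents = 0
        self._results: Dict[str, dict] = {}   # idempotency key -> receipt

    def charge(self, idempotency_key: str, amount_cents: int) -> dict:
        # A repeated key means the client is retrying: return the stored
        # receipt and do not apply the charge again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]

        self.balance_cents += amount_cents
        receipt = {
            "key": idempotency_key,
            "amount_cents": amount_cents,
            "balance_cents": self.balance_cents,
        }
        self._results[idempotency_key] = receipt
        return receipt


service = PaymentService()

first = service.charge("order-1001", 2500)
retry = service.charge("order-1001", 2500)    # e.g. the first response was lost
other = service.charge("order-1002", 1000)    # a genuinely new request

print(first == retry, service.balance_cents)
```

This is what makes automatic retries safe: the client can resend after a timeout without knowing whether the first attempt succeeded.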

Implementing these concepts is crucial for high-availability systems, especially for mission-critical applications where downtime is unacceptable.

9. Monitoring & Logging: The System's Eyes & Ears

You can't fix what you can't see. Monitoring and logging are indispensable for understanding the health, performance, and behavior of your system. They provide the visibility needed to detect anomalies, diagnose issues, and proactively address problems before they escalate into user-facing outages.

- Monitoring: Collecting and analyzing metrics about system components (CPU usage, memory, network I/O, database query times, API response latency, error rates, etc.).

- Tools: Prometheus for metrics collection, Grafana for visualization and dashboards.

- Alerting: Setting up notifications for when critical metrics exceed predefined thresholds.

- Logging: Recording events and messages generated by the application, providing detailed chronological insights into what happened, when, and why.

- Tools: ELK Stack (Elasticsearch for storage and search, Logstash for data processing, Kibana for visualization), Splunk.

- Structured Logging: Logging data in a machine-readable format (e.g., JSON) makes it easier to query and analyze.
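For a sense of what structured logging looks like in practice, here is a small sketch built on Python's standard `logging` module. The `JsonFormatter` class and the field names `request_id` and `latency_ms` are assumptions chosen for the example. A timestamp is left out only to keep the output deterministic; real log lines would include one.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Values passed via `extra=` become attributes on the record.
        for field in ("request_id", "latency_ms"):
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        return json.dumps(payload)


stream = io.StringIO()                 # stands in for stdout or a log file
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False               # keep the demo output in one place

logger.info("order placed", extra={"request_id": "req-123", "latency_ms": 87})

event = json.loads(stream.getvalue())  # the line parses back into a dict
print(event)
```

Because every line is a JSON object with consistent keys, a log pipeline can filter on `request_id` or aggregate `latency_ms` without fragile text parsing.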

A well-designed monitoring and logging strategy is your early warning system, enabling rapid incident response and continuous improvement.

10. User Experience (UX): The Human Connection

While system design often focuses on the intricate backend, it’s vital to remember that all technical decisions ultimately impact the end-user experience. A technically brilliant system that is slow, confusing, or unreliable will fail to engage users.

- Performance Impacts UX: Fast response times, minimal latency, and smooth interactions are direct results of good system design choices (caching, load balancing, scalability).

- Reliability Impacts UX: Users expect systems to be available and functional. Fault tolerance and redundancy contribute directly to this.

- Security Impacts UX: Users need to trust that their data is safe. A secure system builds that trust.

- Intuitive Interfaces: While the interface itself is primarily a frontend concern, features like real-time updates, personalized content, and complex search are often enabled or limited by backend design choices.

Always keep the user in mind. How will your architectural choices, database queries, and error handling affect their journey? A delightful UX is the ultimate measure of a system's success.

Mastering the Craft: Principles for Effective System Design

Beyond understanding the individual components, seasoned designers follow a set of guiding principles. These are the "rules of thumb" that help navigate complexity and build systems that truly last.

1. Deep Dive into Requirements

Before you draw a single box or arrow, truly understand what problem you're trying to solve. This means engaging deeply with stakeholders, clarifying ambiguities, and distinguishing between functional (what the system does) and non-functional requirements (how well the system does it – e.g., speed, security, scalability). Don't just list them; internalize them. A common pitfall is building a perfect solution to the wrong problem. The more you understand the "why," the better equipped you'll be to design the "how."

2. Designing for Future Growth

Even if your project starts small, assume success. Designing with scalability in mind from the outset can save you monumental headaches and costly refactors later. This doesn't mean over-engineering for hypothetical billions of users immediately, but rather choosing components and architectures that allow for horizontal scaling, anticipate increased data volume, and permit easy feature expansion. Think about the elasticity of your chosen components and if they support dynamic resource allocation.

3. Embracing Simplicity

Complexity is the enemy of reliability, maintainability, and understanding. Strive for the simplest possible design that meets all current and anticipated requirements. Avoid unnecessary features, overly complicated architectural patterns, or premature optimization. Simple systems are:

- Easier to Understand: New team members can onboard faster.

- Easier to Debug: Fewer moving parts mean fewer places for bugs to hide.

- Easier to Maintain: Changes and updates are less likely to break existing functionality.

- More Reliable: Less complexity often means fewer points of failure.

Sometimes, the most elegant solution is the one that looks the least impressive on paper but performs flawlessly in practice.

4. Anticipating Failure

It’s not if your system will fail, but when. Designing with failure in mind means accepting this inevitability and building mechanisms to recover gracefully. This involves:

- Identifying Single Points of Failure: Remove them wherever possible through redundancy.

- Implementing Retry Mechanisms and Timeouts: For external service calls.

- Building Circuit Breakers: To prevent cascading failures.

- Designing for Data Backup and Recovery: Essential disaster recovery plans.

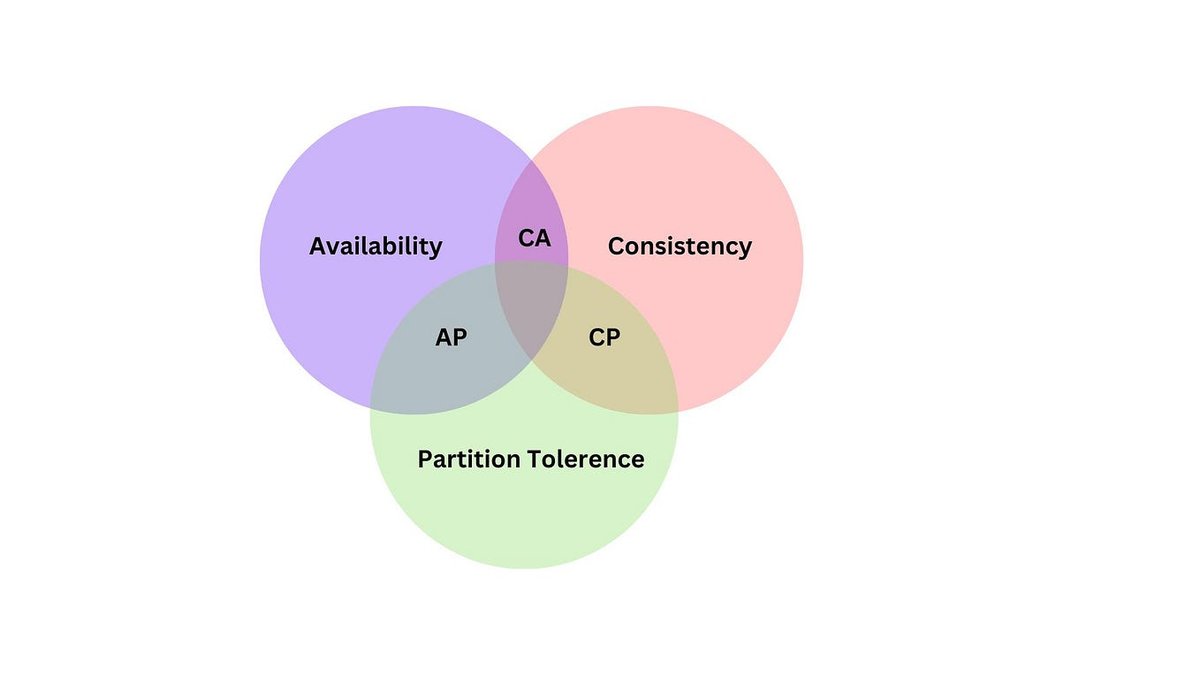

- Considering Network Partitions: How will your system behave if parts of it can't communicate?

A system that can withstand and quickly recover from failures is a truly resilient one.

5. Security First, Always

As emphasized earlier, security is not an afterthought. It must be woven into the fabric of your system from the initial design phase. This involves:

- Threat Modeling: Systematically identifying potential threats and vulnerabilities.

- Secure Defaults: Shipping every component with its most restrictive practical settings enabled out of the box, so that loosening security is always a deliberate choice.

- Principle of Least Privilege: Granting users and services only the minimum necessary access.

- Regular Security Reviews: Incorporating security audits and penetration testing throughout the development lifecycle.

A proactive security posture is your best defense against evolving cyber threats.

6. Testing Early, Testing Often

A well-designed system still needs rigorous testing. Incorporate various types of tests throughout the development process:

- Unit Tests: Validate individual components or functions.

- Integration Tests: Verify communication and interaction between different system parts.

- End-to-End Tests: Simulate real user scenarios.

- Performance/Load Tests: Assess behavior under anticipated and peak loads.

- Security Tests: Look for vulnerabilities.

Continuous testing helps identify issues early, reduces the cost of fixing bugs, and ensures that new features don’t inadvertently break existing functionality.

7. Planning for Long-Term Maintainability

A system's lifecycle extends far beyond its initial deployment. It will need updates, bug fixes, and new features. Design with maintainability in mind by:

- Modular Architecture: Breaking down the system into independent, interchangeable modules.

- Clear Code and Naming Conventions: Making the codebase easy to understand.

- Version Control: Using systems like Git to track changes and facilitate collaboration.

- Automated Deployment: Streamlining the process of pushing changes to production.

A maintainable system is adaptable, making it easier to evolve with changing business requirements and technology landscapes.

8. The Power of Documentation

Good documentation is invaluable, serving as the collective memory of your project. Document:

- Architecture Decisions: Why certain choices were made.

- API Specifications: How services interact.

- Key Components: Their purpose and how they work.

- Deployment and Operational Guides: For system administrators.

Clear, up-to-date documentation reduces onboarding time for new team members, minimizes knowledge silos, and ensures consistency across development and operations teams. It transforms tacit knowledge into explicit, shared understanding.

9. Leveraging Proven Tools & Services

You don't need to reinvent the wheel for every problem. The modern software ecosystem offers a vast array of proven tools, frameworks, and cloud services that solve common challenges efficiently.

- Authentication & Authorization: Use established identity providers (e.g., Auth0, AWS Cognito, Google Firebase Authentication).

- Monitoring & Logging: Adopt industry-standard solutions (e.g., Prometheus, Grafana, ELK Stack).

- Databases & Caches: Leverage robust, battle-tested options (e.g., PostgreSQL, Redis, MongoDB).

Using existing, well-maintained solutions saves development time, reduces the risk of introducing bugs or vulnerabilities, and allows your team to focus on your core business logic rather than infrastructure.

10. The Value of Collaborative Feedback

Designing in isolation is a recipe for blind spots. Actively seek feedback throughout the design process from:

- Peers: Other engineers who can spot technical flaws or suggest alternative approaches.

- Stakeholders: To ensure the design aligns with business objectives.

- Users: Early user feedback can highlight usability issues or unmet needs.

Regular design reviews, discussions, and iterative refinement based on diverse perspectives lead to more robust, user-centric, and effective systems.

Building Beyond the Basics: What's Next?

Mastering the key components and principles of system design isn't a one-time achievement; it's a continuous journey of learning and adaptation. The technological landscape constantly evolves, bringing new tools, paradigms, and challenges. The confidence you gain from understanding these foundational elements will empower you to tackle increasingly complex projects.

Start by applying these principles to your current work. Practice diagramming system architectures, debate database choices, and scrutinize security implications. Learn from existing robust systems and analyze their design decisions. The more you engage with these concepts, the more intuitive system design will become. The goal isn't just to build a system, but to build a reliable, maintainable, and scalable system that truly serves its purpose for years to come.